Description

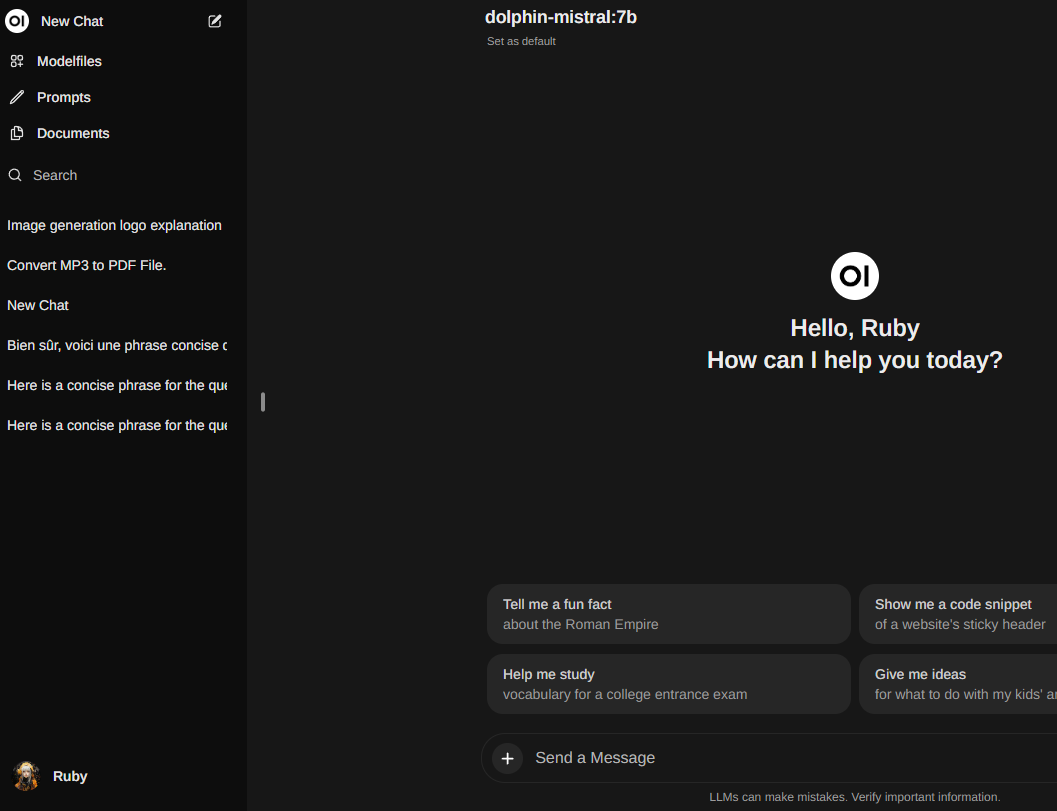

Ollama is a lightweight, extensible application for building and running large language models locally on your own machine. It builds on the llama.cpp inference library and exposes a simple API for creating, running, and managing models. Ollama also provides a library of pre-trained models, such as Llama 2, Mistral, Gemma, and many others, which can be downloaded and integrated into a wide range of applications. Because models run locally instead of on cloud-based infrastructure, the project emphasizes flexibility, privacy (prompts and data stay on the machine), and performance.
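As an illustration of that API, here is a minimal sketch that sends one prompt to a locally running Ollama server through its REST endpoint `/api/generate`. It assumes the server is listening on the default address `http://localhost:11434` and that the requested model has already been downloaded. It uses only the Python standard library and sets `"stream": False` so the reply arrives as a single JSON object whose `response` field holds the generated text. The helper names (`build_generate_request`, `parse_generate_response`, `generate`) are hypothetical and chosen for this example.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for the /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_generate_response(raw: bytes) -> str:
    """Extract the generated text from a non-streaming JSON reply."""
    body = json.loads(raw.decode("utf-8"))
    return body["response"]


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the model's reply."""
    req = build_generate_request(model, prompt)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return parse_generate_response(resp.read())
```

For example, after downloading a model with `ollama pull mistral`, calling `generate("mistral", "Why is the sky blue?")` should return the model's answer as a string. Keeping request construction and response parsing in separate functions makes both easy to check without a running server.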